{zhang.13253, mo.169, sun.397, su.809}@osu.edu, wenhuchen@uwaterloo.ca

Text-guided image editing is widely needed in daily life, ranging from personal use to professional applications such as Photoshop. However, the existing language-guided image editing methods are either zero-shot or trained on automatically synthesized dataset, which contains high volume of noise. Thus, the existing models requires lots of manual tuning to produce desirable outcome.

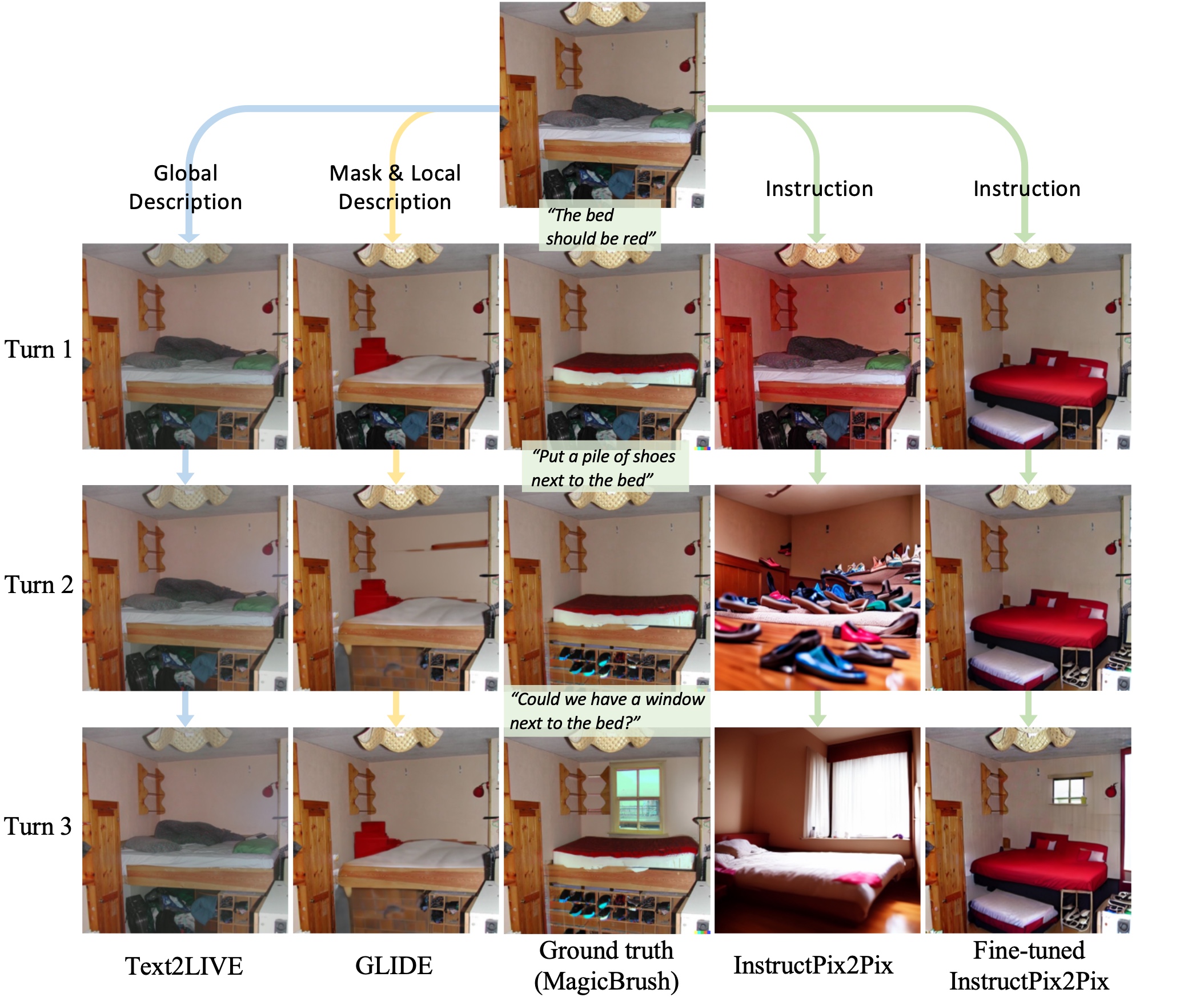

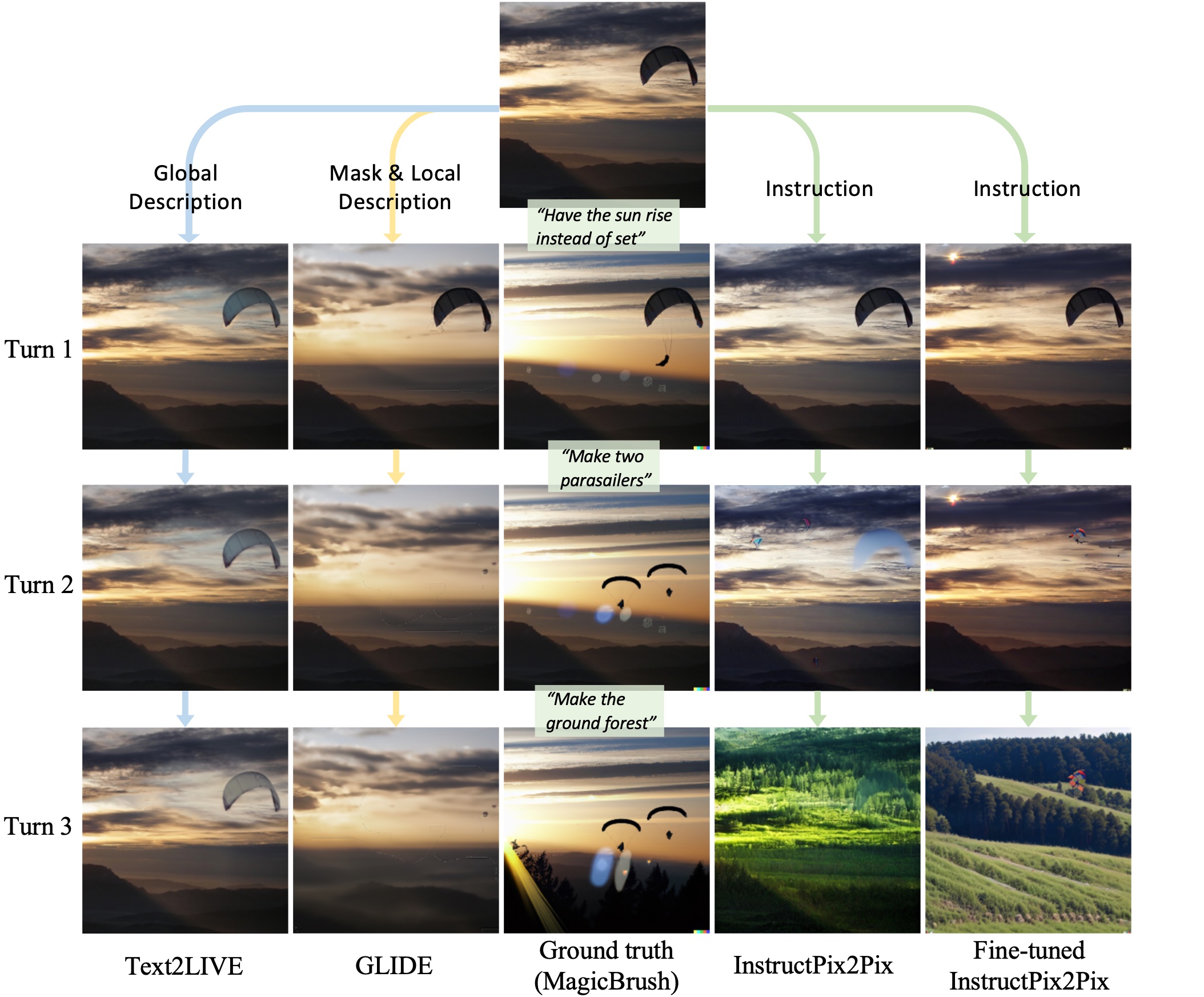

To address this issue, we introduce MagicBrush, the first large-scale, manually-annotated instruction-guided image editing dataset covering diverse scenarios single-turn, multi-turn, mask-provided, and mask-free editing. MagicBrush comprises 10K (source image, instruction, target image) triples, which is sufficient to train large-scale image editing models. We fine-tune InstructPix2Pix on MagicBrush and show that the new model can produce much better images according to human evaluation.

We further conduct extensive experiments to evaluate current image editing baselines from multiple dimensions including quantitative, qualitative, and human studies. The results reveal the challenging nature of our dataset and the gap between current baselines and real-world editing needs.

MagicBrush consists of over 5K edit sessions and more than 10K edit turns. The figure below provides the data splits, as well as the distributions of sessions with varying numbers of edits.

Meanwhile, MagicBrush includes a wide range of edit instructions such as object addition/replacement/removal, action changes, color alterations, text or pattern modifications, and object quantity adjustments. The keywords associated with each edit type demonstrate a broad spectrum, covering various objects, actions, and attributes as shown below.

Results of eight models, and ground truth images in MagicBrush in single-turn editing scenario. The input and ground truth images are the leftmost images.

Furthermore, we fine-tune another recent image editing model HIVE (built upon Stable Diffusion 2.1) on MagicBrush and provide some qualitative comparisons here.

@inproceedings{Zhang2023MagicBrush,

title={MagicBrush: A Manually Annotated Dataset for Instruction-Guided Image Editing},

author={Kai Zhang and Lingbo Mo and Wenhu Chen and Huan Sun and Yu Su},

booktitle={Advances in Neural Information Processing Systems},

year={2023}

}